
Used An Introduction To Statistical Learning Software Engineers As

Bloomberg presents "Foundations of Machine Learning," a training course that was initially delivered internally to the company's software engineers as part of its "Machine Learning EDU" initiative.

Chapter 1: Introduction to Statistics

Learning objectives: after reading this chapter, you should be able to:

1. Distinguish between descriptive and inferential statistics.
2. Explain how samples and populations, as well as a sample statistic and population parameter, differ.
3. Describe three research methods commonly used in behavioral science.


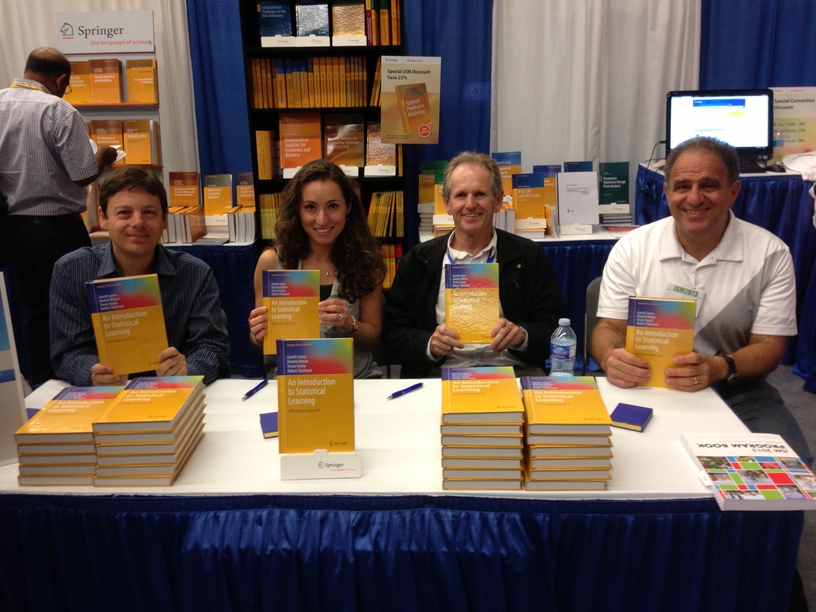

'An Introduction to Statistical Learning' (ISL) by James, Witten, Hastie, and Tibshirani is the "how to" manual for statistical learning. Inspired by 'The Elements of Statistical Learning' (Hastie, Tibshirani, and Friedman), the book provides clear and intuitive guidance on how to implement cutting-edge statistical and machine learning methods. It is appropriate for anyone who wishes to use contemporary tools for data analysis. The book has been translated into Chinese, Italian, Japanese, and Korean.

The course is designed to make valuable machine learning skills more accessible to individuals with a strong math background, including software developers, experimental scientists, engineers, and financial professionals. Its primary goal is to help participants gain a deep understanding of the concepts, techniques, and mathematical frameworks used by experts in machine learning. The 30 lectures in the course are embedded below, but may also be viewed in this YouTube playlist.

2.3 Assessing Model Accuracy: every data set is different, and no single statistical learning method works best on all data sets. An important factor in choosing an appropriate statistical learning method is the type of the response variable.
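To make "assessing model accuracy" concrete: for a quantitative response, quality of fit is commonly measured with the mean squared error (MSE), and what matters most is the MSE on data that was not used for fitting. The sketch below is illustrative only; the toy numbers, the helper names `mse` and `fit_constant`, and the deliberately trivial "predict the training mean" model are assumptions, not taken from the book.

```python
def mse(y_true, y_pred):
    """Mean squared error: the average of the squared prediction errors."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def fit_constant(y_train):
    """A deliberately trivial 'model' (hypothetical): always predict the training mean."""
    return sum(y_train) / len(y_train)


# Hypothetical toy responses, split into a training set and a held-out test set.
y_train = [2.0, 4.0, 6.0]
y_test = [3.0, 7.0]

c = fit_constant(y_train)
train_mse = mse(y_train, [c] * len(y_train))
test_mse = mse(y_test, [c] * len(y_test))
print(f"train MSE = {train_mse:.3f}, test MSE = {test_mse:.3f}")
```

Here the training MSE (8/3, about 2.67) is lower than the test MSE (5.0), which is why accuracy is judged on held-out data rather than on the data used to fit the model.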

This course also serves as a foundation on which more specialized courses and further independent study can build. Please fill out this short online form to register for access to our course's Piazza discussion board.

Below are examples of where statistical methods are used in an applied machine learning project:

- Problem Framing: Requires the use of exploratory data analysis and data mining.
- Data Understanding: Requires the use of summary statistics and data visualization.
- Data Cleaning: Requires the use of outlier detection, imputation, and more.
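The data-cleaning item above names outlier detection and imputation without committing to a method. As a minimal sketch, assuming missing values are stored as `None`, the code below uses two common choices picked for illustration: median imputation and Tukey's IQR fences (k = 1.5). The function names are hypothetical.

```python
import statistics


def impute_median(values):
    """Replace missing entries (None) with the median of the observed entries."""
    observed = [v for v in values if v is not None]
    fill = statistics.median(observed)
    return [fill if v is None else v for v in values]


def iqr_outliers(values, k=1.5):
    """Return values outside Tukey's fences [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]


# Hypothetical raw feature column with two missing entries and one suspicious value.
raw = [10.0, 14.0, None, 11.0, 13.0, 95.0, 12.0]
observed = [v for v in raw if v is not None]

print(iqr_outliers(observed))  # [95.0]
print(impute_median(raw))      # [10.0, 14.0, 12.5, 11.0, 13.0, 95.0, 12.0]
```

The median is used for the fill value because, unlike the mean, it is barely affected by the extreme value 95.0.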

Prerequisites

The quickest way to see if the mathematics level of the course is for you is to take a look at this mathematics assessment, which is a preview of some of the math concepts that show up in the first part of the course.

- Solid mathematical background, equivalent to a 1-semester undergraduate course in each of the following: linear algebra, multivariate differential calculus, probability theory, and statistics.

Common questions from this and previous editions of the course are posted in our FAQ. Once you are registered for the Piazza discussion board, you should receive an email directly from Piazza. The first lecture, Black Box Machine Learning, gives a quick-start introduction to practical machine learning and only requires familiarity with basic programming concepts.

With the abundance of well-documented machine learning (ML) libraries, programmers can now "do" some ML without any understanding of how things are working.

- Recommended: computer science background up to a "data structures and algorithms" course.
- Recommended: at least one advanced, proof-based mathematics course.
- Python programming is required for most homework assignments.

Textbook abbreviations:

- (JWHT) refers to James, Witten, Hastie, and Tibshirani's book An Introduction to Statistical Learning.
- (HTF) refers to Hastie, Tibshirani, and Friedman's book The Elements of Statistical Learning.
- (SSBD) refers to Shalev-Shwartz and Ben-David's book Understanding Machine Learning: From Theory to Algorithms.

Introduction to Statistical Learning Theory

This is where our "deep study" of machine learning begins. We introduce some of the core building blocks and concepts that we will use throughout the remainder of this course: input space, action space, outcome space, prediction functions, loss functions, and hypothesis spaces. We present our first machine learning method: empirical risk minimization.
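The building blocks above fit together in a few lines. This is a toy sketch, not the course's code: it assumes real-valued inputs, actions, and outcomes, the square loss, and a small finite hypothesis space of functions x ↦ w·x on a hypothetical grid of slopes, so that empirical risk minimization reduces to picking the function with the lowest average training loss. In practice the hypothesis space is usually continuous and the minimization is done by an optimization method.

```python
def square_loss(action, outcome):
    """Loss l(a, y) = (a - y)^2 for a real-valued action and outcome."""
    return (action - outcome) ** 2


def empirical_risk(f, data, loss):
    """Average loss of prediction function f over the sample of (x, y) pairs."""
    return sum(loss(f(x), y) for x, y in data) / len(data)


def make_linear(w):
    """Prediction function x -> w * x; one element of the (hypothetical) hypothesis space."""
    def f(x):
        return w * x
    f.w = w  # keep the parameter around so the ERM choice can be inspected
    return f


def erm(hypothesis_space, data, loss):
    """Empirical risk minimizer over a finite hypothesis space."""
    return min(hypothesis_space, key=lambda f: empirical_risk(f, data, loss))


# Hypothetical sample (roughly y = 2x) and a finite grid of slopes 0.0, 0.5, ..., 3.0.
data = [(1.0, 2.1), (2.0, 3.9), (3.0, 6.2)]
hypotheses = [make_linear(0.5 * k) for k in range(7)]

best = erm(hypotheses, data, square_loss)
print(best.w, empirical_risk(best, data, square_loss))
```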

We introduce the notions of approximation error, estimation error, and optimization error.

Directional Derivatives and Approximation (Short)

To do learning, we need to do optimization, and empirical risk minimization was our first example of this. In this lecture we cover stochastic gradient descent, which is today's standard optimization method for large-scale machine learning problems.
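A minimal sketch of stochastic gradient descent, assuming a one-feature linear model f(x) = w·x + b, the square loss, a fixed step size, and reshuffling once per epoch. All of these choices, and the noise-free toy data, are illustrative assumptions. Each update uses the gradient of the loss at a single example rather than the full empirical risk.

```python
import random


def sgd_linear_regression(data, step_size=0.05, epochs=200, seed=0):
    """Fit f(x) = w*x + b by minimizing the average square loss with plain SGD."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    data = list(data)  # copy so shuffling does not mutate the caller's list
    for _ in range(epochs):
        rng.shuffle(data)
        for x, y in data:
            residual = w * x + b - y  # prediction minus target
            # Gradient of (w*x + b - y)**2 with respect to (w, b) at this one example.
            grad_w = 2.0 * residual * x
            grad_b = 2.0 * residual
            w -= step_size * grad_w
            b -= step_size * grad_b
    return w, b


# Hypothetical noise-free data from y = 3x + 1 on a grid in [-1, 1].
xs = [i / 10 for i in range(-10, 11)]
data = [(x, 3.0 * x + 1.0) for x in xs]

w, b = sgd_linear_regression(data)
print(round(w, 3), round(b, 3))  # should be close to 3 and 1
```

With noisy data, a constant step size would leave the iterates bouncing around the minimizer; decreasing step-size schedules are the usual remedy.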

The notions of approximation, estimation, and optimization error will help us understand why "better" optimization methods (such as quasi-Newton methods) may not find prediction functions that generalize better, despite finding better optima.

We introduce "regularization", our main defense against overfitting. We discuss the equivalence of the penalization and constraint forms of regularization (see Hwk 4 Problem 8), and we introduce L1 and L2 regularization, the two most important forms of regularization for linear models. When L1 and L2 regularization are applied to linear least squares, we get "lasso" and "ridge" regression, respectively.
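For ridge regression (L2 penalty on least squares), the penalized objective has a closed-form minimizer, while the lasso in general does not. The sketch below assumes the penalty form with a summed (not averaged) square loss and no intercept term, and solves the normal equations (XᵀX + λI) w = Xᵀy with a small hand-written Gaussian elimination so it needs no third-party libraries. The helper names and toy data are hypothetical.

```python
def solve(A, b):
    """Solve A w = b for a small dense system by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [[float(v) for v in A[i]] + [float(b[i])] for i in range(n)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    w = [0.0] * n
    for i in reversed(range(n)):
        w[i] = (M[i][n] - sum(M[i][c] * w[c] for c in range(i + 1, n))) / M[i][i]
    return w


def ridge_fit(X, y, lam):
    """Minimize sum_i (w . x_i - y_i)^2 + lam * ||w||_2^2 (no intercept)."""
    d = len(X[0])
    # A = X^T X + lam * I and rhs = X^T y.
    A = [[sum(row[j] * row[k] for row in X) + (lam if j == k else 0.0) for k in range(d)]
         for j in range(d)]
    rhs = [sum(row[j] * yi for row, yi in zip(X, y)) for j in range(d)]
    return solve(A, rhs)


# Hypothetical data generated exactly by y = 2*x1 - x2.
X = [[1, 0], [0, 1], [1, 1], [2, 1]]
y = [2, -1, 1, 3]

w_ls = ridge_fit(X, y, 0.0)     # lam = 0 recovers least squares: about [2, -1]
w_ridge = ridge_fit(X, y, 1.0)  # lam > 0 shrinks the weights toward zero
print(w_ls, w_ridge)
```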

We continue our discussion of ridge and lasso regression by focusing on the case of correlated features, which is a common occurrence in machine learning practice. We will see that ridge solutions tend to spread weight equally among highly correlated features, while lasso solutions may be unstable in the case of highly correlated features. We then introduce the "elastic net", a combination of L1 and L2 regularization, which ameliorates the instability of L1 while still allowing for sparsity in the solution. (Credit to Brett Bernstein for the excellent graphics.)

Reference: Zou and Hastie's Elastic Net Paper (2005).

Finally, we present "coordinate descent", our second major approach to optimization. When applied to the lasso objective function, coordinate descent takes a particularly clean form and is known as the "shooting algorithm".
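A minimal sketch of coordinate descent on the lasso objective, assuming the form Σᵢ (w·xᵢ − yᵢ)² + λ‖w‖₁ with no intercept and a fixed number of sweeps instead of a convergence test. Minimizing over one coordinate w_j with the others held fixed has a closed-form answer: with a_j = 2 Σᵢ x_ij² and c_j = 2 Σᵢ x_ij (yᵢ − w·xᵢ + w_j x_ij), the update is w_j = soft(c_j, λ) / a_j, where soft is soft-thresholding. The function names and data are hypothetical.

```python
def soft_threshold(c, lam):
    """sign(c) * max(|c| - lam, 0)."""
    if c > lam:
        return c - lam
    if c < -lam:
        return c + lam
    return 0.0


def lasso_shooting(X, y, lam, sweeps=100):
    """Cyclic coordinate descent for sum_i (w . x_i - y_i)^2 + lam * ||w||_1."""
    n, d = len(X), len(X[0])
    w = [0.0] * d
    for _ in range(sweeps):
        for j in range(d):
            a_j = 2.0 * sum(X[i][j] ** 2 for i in range(n))
            # Residuals with coordinate j's own contribution added back in.
            c_j = 2.0 * sum(
                X[i][j] * (y[i] - sum(X[i][k] * w[k] for k in range(d)) + w[j] * X[i][j])
                for i in range(n)
            )
            w[j] = soft_threshold(c_j, lam) / a_j if a_j > 0 else 0.0
    return w


# Hypothetical data generated exactly by y = 2*x1 - x2.
X = [[1, 0], [0, 1], [1, 1], [2, 1]]
y = [2, -1, 1, 3]

print(lasso_shooting(X, y, 0.0))    # no penalty: close to least squares [2, -1]
print(lasso_shooting(X, y, 3.0))    # moderate penalty: second weight driven exactly to 0
print(lasso_shooting(X, y, 100.0))  # large penalty: all weights are 0
```

The exact zeros come from the soft-thresholding step, which is how the lasso produces sparse solutions.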

Loss Functions for Regression and Classification

We start by discussing absolute loss and Huber loss, which we consider as alternatives to the square loss that are more robust to outliers. Next, we turn to the classification setting, introducing the notions of score, margin, and margin-based loss functions.
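The losses named above can be written as short functions. This sketch assumes regression losses are expressed in terms of the residual r = y − prediction, Huber's threshold δ defaults to 1, and for binary classification the labels are y ∈ {−1, +1} with real-valued score s = f(x) and margin m = y·s. Treating a margin of exactly 0 as an error in the zero-one loss is a convention chosen here.

```python
import math


# Regression losses as functions of the residual r = y - prediction.
def square_loss(r):
    return r * r


def absolute_loss(r):
    return abs(r)


def huber_loss(r, delta=1.0):
    """Quadratic for |r| <= delta and linear beyond, so large outliers cost less than under square loss."""
    if abs(r) <= delta:
        return 0.5 * r * r
    return delta * (abs(r) - 0.5 * delta)


# Margin-based classification losses: labels y in {-1, +1}, margin m = y * score.
def zero_one_loss(m):
    return 1.0 if m <= 0 else 0.0  # convention: m == 0 counts as an error


def hinge_loss(m):
    return max(0.0, 1.0 - m)


def logistic_loss(m):
    """log(1 + exp(-m)), arranged to avoid overflow for large |m|."""
    if m >= 0:
        return math.log1p(math.exp(-m))
    return -m + math.log1p(math.exp(m))


for r in (0.5, 3.0, 10.0):
    print(r, square_loss(r), absolute_loss(r), huber_loss(r))
```

At a residual of 10, the square loss is 100 while the Huber loss (δ = 1) is 9.5, which is the sense in which Huber loss is more robust to outliers.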

The interplay between the loss function we use for training and the properties of the prediction function we end up with is a theme we will return to several times during the course.

Lagrangian Duality and Convex Optimization

We introduce the basics of convex optimization and Lagrangian duality. We discuss weak and strong duality and Slater's constraint qualification, and we derive the complementary slackness conditions.
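For reference, here is a compact statement of the objects named above, using a standard textbook form with inequality constraints only (this notation is an assumption, not taken from the lecture). The last two lines derive complementary slackness from strong duality, assuming optimal x* and λ* exist.

```latex
\begin{aligned}
&\text{Primal: minimize } f_0(x) \text{ subject to } f_i(x) \le 0,\ i = 1,\dots,m, \quad \text{optimal value } p^*\\
&L(x, \lambda) = f_0(x) + \sum_{i=1}^{m} \lambda_i f_i(x), \qquad
 g(\lambda) = \inf_x L(x, \lambda), \qquad
 d^* = \sup_{\lambda \succeq 0} g(\lambda)\\
&\text{Weak duality (always): } d^* \le p^*. \qquad \text{Strong duality: } d^* = p^*.\\
&\text{Slater: if } f_0, \dots, f_m \text{ are convex and some } x \in \operatorname{relint} \mathcal{D}
 \text{ has } f_i(x) < 0 \ \forall i, \text{ then } d^* = p^*.\\
&\text{Complementary slackness: } f_0(x^*) = g(\lambda^*) \le f_0(x^*) + \sum_{i} \lambda_i^* f_i(x^*) \le f_0(x^*)\\
&\quad\Rightarrow\ \sum_{i} \lambda_i^* f_i(x^*) = 0
 \ \Rightarrow\ \lambda_i^* f_i(x^*) = 0 \ \forall i
 \quad (\text{each term is} \le 0 \text{ since } \lambda_i^* \ge 0,\ f_i(x^*) \le 0).
\end{aligned}
```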

2) Strong duality is a sufficient condition for the equivalence between the penalty and constraint forms of regularization (see Hwk 4 Problem 8). This mathematically intense lecture may be safely skipped.
